Divide and conquer everywhere

Advantages of splitting your software solution into smaller pieces

Introduction

Anyone who has studied algorithms is probably familiar with the concept of divide and conquer: a big, difficult problem is split into smaller subproblems, each subproblem is solved (recursively), and the partial results are then combined into the solution of the original problem. The same idea applies when you analyze your product backlog and try to split a big problem into smaller, more manageable tasks that are easier to tackle. This is not a new paradigm. In fact, it is often applied in politics, sociology and psychology, where one power breaks another into smaller, more manageable pieces and then takes control of them one by one.
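As a refresher, merge sort is a textbook instance of the technique: divide the list in two, sort each half recursively, then combine the sorted halves. The sketch below is purely illustrative and is not part of the project described in this article.

```python
def merge_sort(items: list[int]) -> list[int]:
    """Sort a list using the classic divide-and-conquer strategy."""
    # Base case: a list with zero or one element is already sorted.
    if len(items) <= 1:
        return list(items)

    # Divide: split the problem into two halves.
    mid = len(items) // 2
    # Conquer: solve each half recursively.
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Combine: merge the two sorted halves into a single sorted list.
    merged: list[int] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```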

The scenario

In a recent project for a well-known Italian newspaper, we needed to refactor the process in charge of analyzing and loading all the information of each newspaper edition. Every day, a content team was responsible for digitizing all of the edition's articles, images and pages, the position of each article within its page, and much more. All this information, internally mapped as an XML hierarchy, was packed into a huge zip file that, once processed, could be consumed by many other applications, such as a PDF viewer app for mobile phones.

This process of analyzing the XML hierarchy inside the zip file and loading its data was complex, error-prone and time-consuming. It basically consisted of four steps:

- Downloading, extracting and analyzing the information inside the zip file

- Saving all this information to a DB

- Generating the newspaper images to be consumed

- Populating an index to power a fast search engine
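The four steps above can be modeled as independent functions that communicate only through the data they hand to each other. This is a minimal, hypothetical Python sketch: the function names and the dict payload are our own assumptions, and each step is a stub rather than the project's real code. Because the runner reports which step did not complete, a retry can resume from that step instead of rerunning the whole process.

```python
from typing import Callable


# Hypothetical stand-ins for the four steps; each one reads and extends a plain dict.
def analyze_zip(data: dict) -> dict:
    return {**data, "articles": ["a1", "a2"]}


def save_to_db(data: dict) -> dict:
    return {**data, "saved": True}


def generate_images(data: dict) -> dict:
    return {**data, "images": [f"{a}.jpg" for a in data["articles"]]}


def index_for_search(data: dict) -> dict:
    return {**data, "indexed": True}


STEPS: list[Callable[[dict], dict]] = [
    analyze_zip,
    save_to_db,
    generate_images,
    index_for_search,
]


def run_pipeline(data: dict, start_at: int = 0) -> tuple[dict, int]:
    """Run the steps in order, starting from index `start_at`.

    Returns the latest data and the index of the first step that did not
    complete (len(STEPS) if all succeeded), so a caller can resume there.
    """
    for index in range(start_at, len(STEPS)):
        try:
            data = STEPS[index](data)
        except Exception:
            # Stop at the failing step; earlier results are kept in `data`.
            return data, index
    return data, len(STEPS)


result, next_step = run_pipeline({"zip": "edition.zip"})
print(next_step, result["images"])  # 4 ['a1.jpg', 'a2.jpg']
```

In a real deployment the "resume" information would live outside the process (for example, in which queue a message is waiting), but the idea is the same: a failure only costs the step that failed.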

To make things even harder, we also had to support running multiple processes at the same time, since a large backlog of zips had to be processed initially (about 800 zips at the same time). A serverless approach seemed a good way to make the process scale as needed, but is that enough? Some questions arose:

- Who are the stakeholders of each part of the process?

- Could different people need to be informed depending on the type of error and their role?

- Since there are four clear steps, do we need to rerun the whole process when one of them fails?

As we suspected, after some digging with the customer, we found out that each part of the process had different actors. For example, the owner of the data inside the zip was the content manager, the owner of the DB and the search engine was a system administrator, and the owner of the image generation was a digital manager.
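Once each step has its own owner, error notifications can be routed per step. The following is a hypothetical Python sketch: the step names and email addresses are placeholders we made up, and it only builds the message text instead of actually sending it.

```python
from enum import Enum


class Step(Enum):
    ZIP_ANALYSIS = "zip_analysis"
    DB = "db"
    SEARCH_INDEX = "search_index"
    IMAGE_GENERATION = "image_generation"


# Owners as described by the customer; the addresses are hypothetical placeholders.
OWNERS = {
    Step.ZIP_ANALYSIS: "content-manager@example.com",
    Step.DB: "sysadmin@example.com",
    Step.SEARCH_INDEX: "sysadmin@example.com",
    Step.IMAGE_GENERATION: "digital-manager@example.com",
}


def recipient_for(step: Step) -> str:
    """Return who should be notified when `step` fails."""
    return OWNERS[step]


def notify_failure(step: Step, error: Exception) -> str:
    # A real system would send an email or chat message; here we only build the text.
    return f"To {recipient_for(step)}: {step.value} failed: {error}"


print(notify_failure(Step.IMAGE_GENERATION, RuntimeError("corrupt page image")))
# To digital-manager@example.com: image_generation failed: corrupt page image
```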

The architecture

In this scenario, we realized that decoupling each part of the process would be very useful. Developing each step independently not only lets us customize every task as needed, but also lets developers choose the best language for each task. For example, choosing Node.js and processing the zip file with streams while downloading, extracting and analyzing it avoids running into the limited temporary storage space available to an AWS Lambda function. At the same time, we could use the GD library to manipulate the images in PHP, since it is a well-known tool here at SparkFabrik.
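The streaming idea can be sketched in Python as well, although the project itself used Node.js for this step. This minimal sketch assumes a hypothetical layout in which XML files contain `<article>` elements with a `title` attribute. One honest caveat: Python's `zipfile` needs a seekable file, so the archive itself is held in memory here; what the sketch avoids is extracting every member to temporary disk, and `iterparse` reads each XML file incrementally.

```python
import io
import xml.etree.ElementTree as ET
import zipfile
from typing import Iterator


def iter_articles(zip_bytes: bytes) -> Iterator[tuple[str, str]]:
    """Yield (file name, article title) pairs without extracting files to disk.

    Assumes a hypothetical layout: XML files whose <article> elements
    carry a `title` attribute.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if not name.endswith(".xml"):
                continue  # skip images and other non-XML members
            # archive.open() returns a file-like stream over the compressed member.
            with archive.open(name) as stream:
                # iterparse reads the XML incrementally instead of loading it whole.
                for _event, element in ET.iterparse(stream, events=("end",)):
                    if element.tag == "article":
                        yield name, element.get("title", "")
                        element.clear()  # release memory for processed elements


# Build a tiny zip in memory to try it out.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr(
        "pages/page-1.xml",
        '<page><article title="Front page"/><article title="Sport"/></page>',
    )
    archive.writestr("images/cover.jpg", b"not really an image")

print(list(iter_articles(buffer.getvalue())))
# [('pages/page-1.xml', 'Front page'), ('pages/page-1.xml', 'Sport')]
```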

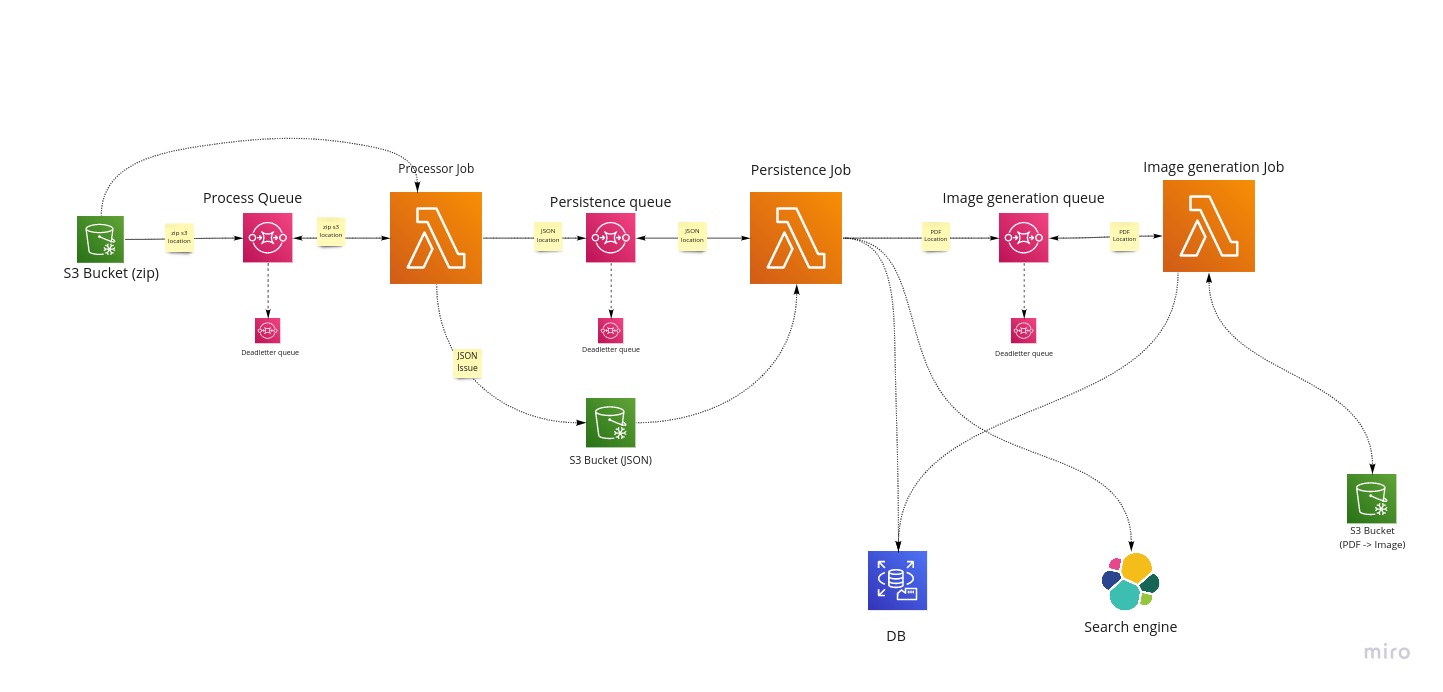

The architecture looks more or less like this:

All three lambdas were developed in PHP and deployed to AWS using Bref combined with the Serverless Framework, two great tools for configuring and deploying serverless applications in PHP. Staying on the PHP topic, here at SparkFabrik we are Laravel fans: its architecture performs well, it makes it easy to build quality code, it provides great development tools such as Artisan, and it is backed by a big community. That said, we felt that going for a full web framework would be like killing a mosquito with a bazooka, so we adopted Lumen, Laravel's little (but no less bright) brother, which is designed for building microservices. And that's exactly our use case.

The lambda tasks communicated with each other through SQS queues, which gave us fault tolerance at every step. We achieved this by configuring the Serverless Framework to define an event on each of those queues. When a message arrives, AWS invokes the corresponding Lambda with an SQS event, and the function processes the newspaper information, taking full advantage of Lumen and the Eloquent ORM. If more complex orchestration is needed, AWS Step Functions is another great tool for deciding which task runs next.
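To illustrate how a queue-triggered step can fail without losing the rest of its batch, here is a Python analog of such a handler (the project's real handlers were PHP with Bref and Lumen). It reads the standard SQS event shape (`Records`, each with `messageId` and `body`) and returns a partial batch response, which Lambda honors only when `ReportBatchItemFailures` is enabled on the event source mapping. The message format and `generate_images_for` are hypothetical.

```python
import json
from typing import Any


def generate_images_for(edition_id: str) -> None:
    """Hypothetical step logic; raises on failure."""
    if not edition_id:
        raise ValueError("missing edition id")


def handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Lambda entry point for messages delivered by an SQS event source."""
    failures = []
    for record in event.get("Records", []):
        try:
            # Hypothetical message format: {"edition_id": "..."}
            payload = json.loads(record["body"])
            generate_images_for(payload.get("edition_id", ""))
        except Exception:
            # Mark only this message as failed so SQS makes it visible again
            # for a retry (requires ReportBatchItemFailures on the mapping).
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


event = {
    "Records": [
        {"messageId": "m-1", "body": json.dumps({"edition_id": "2021-05-03"})},
        {"messageId": "m-2", "body": json.dumps({})},
    ]
}
print(handler(event))  # {'batchItemFailures': [{'itemIdentifier': 'm-2'}]}
```

Messages that keep failing can then be moved to a dead-letter queue through the source queue's redrive policy, so they can be inspected without blocking the other editions.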

Conclusion

Splitting a big, complex problem into smaller ones is a great enabler for problem-solving. It lets you focus on more manageable tasks without worrying about what happened before or what will happen next. Applying this approach to your architecture design not only lets the team choose the best tools for each small problem, but also lets you customize the different business rules your customer needs.